Cache is a special area of memory, managed by a cache controller, that improves performance by storing the contents of frequently accessed memory locations and their addresses. A memory cache and a disk cache are not the same: a memory cache is implemented in hardware and speeds up access to main memory, while a disk cache is usually software that improves hard-disk performance. When the processor references a memory address, the cache checks whether it holds that address. If it does (a cache hit), the data is passed directly to the processor and no RAM access is necessary. A cache speeds up a computer whose RAM is slow compared with its processor because cache memory, typically built from SRAM, is much faster than main memory, which is typically DRAM.
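In practice, a hardware cache usually stores only part of each address, the tag, rather than the full address. A common scheme splits every address into three fields: a byte offset within a cache line, an index that selects where in the cache to look, and the tag, which is kept alongside the data and compared to confirm a hit. The sketch below shows that split; the 64-byte line size and 64 sets are illustrative assumptions, not values from any particular processor.

```python
def split_address(addr: int, line_size: int = 64, num_sets: int = 64) -> tuple[int, int, int]:
    """Split a byte address into (tag, index, offset).

    line_size (bytes per cache line) and num_sets are illustrative values;
    both must be powers of two for the bit masks below to work.
    """
    offset_bits = line_size.bit_length() - 1          # log2(line_size)
    index_bits = num_sets.bit_length() - 1            # log2(num_sets)
    offset = addr & (line_size - 1)                   # byte within the line
    index = (addr >> offset_bits) & (num_sets - 1)    # which set to look in
    tag = addr >> (offset_bits + index_bits)          # stored and compared on lookup
    return tag, index, offset


print(split_address(0x12345))          # (18, 13, 5)
print(split_address(0x12345 + 4096))   # (19, 13, 5): same index, different tag
```

Two addresses exactly 4096 bytes apart (64 sets × 64 bytes) share an index but differ in tag, so they compete for the same place in the cache. The cache types described next differ in how they handle that competition.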

There are several types of caches:

Direct-mapped cache: Each block of memory can be stored in exactly one location in the cache, so when the processor calls for certain data, the cache can locate it quickly by checking that single location. However, since many blocks in RAM map to the same cache location, two frequently used blocks that share a location keep evicting each other, and the cache may spend much of its time refilling itself from main memory.

Fully associative cache: Information from RAM may be placed in any block of the cache, so the most recently accessed data is usually present; however, finding that information may be slow or expensive, because the requested address must be compared against the tag of every block in the cache.

Set-associative cache: The cache is divided into sets, each containing several locations (ways) that each hold a block of data. Each block from RAM maps to exactly one set, but it may be placed in any location within that set. Because only the locations in one set need to be searched, search time is shorter than in a fully associative cache, and frequently used data are less likely to be overwritten than in a direct-mapped cache. Common designs are two-, four-, or eight-way set-associative, meaning each set holds two, four, or eight blocks.
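The three designs can be seen as one model with different associativity: a direct-mapped cache is one-way set-associative, and a fully associative cache is a single set containing every block. The following Python sketch simulates that model. It assumes least-recently-used (LRU) replacement within each set (real caches use a variety of replacement policies) and replays a hypothetical access pattern that alternates between two addresses which map to the same direct-mapped location.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy cache model: ways=1 is direct-mapped, ways=num_blocks is fully associative.

    Replacement within a set is LRU, which is an assumption for this sketch.
    """

    def __init__(self, num_blocks: int, ways: int, block_size: int = 64):
        assert num_blocks % ways == 0, "num_blocks must be a multiple of ways"
        self.ways = ways
        self.num_sets = num_blocks // ways
        self.block_size = block_size
        # One OrderedDict per set; keys are tags, ordered least to most recently used.
        self.sets = [OrderedDict() for _ in range(self.num_sets)]
        self.hits = 0
        self.misses = 0

    def access(self, addr: int) -> bool:
        block = addr // self.block_size
        index = block % self.num_sets      # the one set this block may live in
        tag = block // self.num_sets       # distinguishes blocks sharing that set
        lines = self.sets[index]
        if tag in lines:
            lines.move_to_end(tag)         # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(lines) >= self.ways:
            lines.popitem(last=False)      # evict the LRU block in this set only
        lines[tag] = True
        return False


def run(ways: int) -> tuple[int, int]:
    """Return (hits, misses) for an 8-block cache with the given associativity."""
    cache = SetAssociativeCache(num_blocks=8, ways=ways)
    for addr in [0, 512] * 10:             # two addresses that collide when direct-mapped
        cache.access(addr)
    return cache.hits, cache.misses


for label, ways in [("direct-mapped", 1), ("2-way", 2), ("fully associative", 8)]:
    print(label, run(ways))
# direct-mapped (0, 20)
# 2-way (18, 2)
# fully associative (18, 2)
```

With only eight blocks, the direct-mapped cache misses on every access because the two blocks keep evicting each other, while both the two-way and the fully associative versions miss only on the first touch of each block.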

Disk Cache

An area of computer memory where data is temporarily stored on its way to or from a disk. When an application asks for information from the hard disk, the disk cache program first checks whether that data is already in the cache memory. If it is, the program loads the information from the cache memory rather than from the hard disk. If the information is not in memory, the program reads the data from the disk, copies it into the cache memory for future reference, and then passes the data to the requesting application. This process is shown in the accompanying illustration. A disk cache program can significantly speed up most disk operations. Some network operating systems also cache other frequently accessed and important information, such as directories and the file allocation table (FAT).

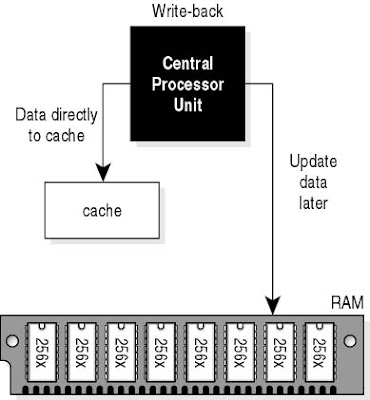
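The read path described above can be sketched as a small read-through cache. `read_from_disk` and `fake_disk_read` are hypothetical stand-ins for a real disk read, the capacity is counted in blocks, and least-recently-used eviction is an assumption; the text does not say how a disk cache decides what to discard.

```python
from collections import OrderedDict
from typing import Callable


class DiskCache:
    """Read-through block cache in front of a slow read function (a sketch)."""

    def __init__(self, read_from_disk: Callable[[int], bytes], capacity: int = 1024):
        self.read_from_disk = read_from_disk   # hypothetical slow disk read
        self.capacity = capacity               # maximum number of cached blocks
        self.blocks = OrderedDict()            # block number -> data, LRU first
        self.disk_reads = 0

    def read(self, block_no: int) -> bytes:
        if block_no in self.blocks:            # hit: serve from memory
            self.blocks.move_to_end(block_no)
            return self.blocks[block_no]
        data = self.read_from_disk(block_no)   # miss: go to the disk
        self.disk_reads += 1
        self.blocks[block_no] = data           # keep a copy for future requests
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)    # evict least recently used block
        return data                            # pass the data to the caller


def fake_disk_read(block_no: int) -> bytes:
    """Hypothetical stand-in for reading one block from disk."""
    return f"block {block_no}".encode()


cache = DiskCache(fake_disk_read, capacity=2)
for n in [1, 2, 1, 1, 3, 2]:
    cache.read(n)
print(cache.disk_reads)  # 4
```

In this trace, six requests cause only four disk reads; the repeated reads of block 1 are served from memory.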

Write-back Cache

A technique used in cache design for writing information back into main memory. In a write-back cache, the cache stores the changed block of data and marks it as modified (dirty), but only updates main memory under certain conditions, such as when the block must be evicted because a newer block must be loaded into its place, or when the controlling algorithm determines that too much time has elapsed since the last update. This method is more complex to implement than write-through, but it is generally much faster, because repeated writes to the same block cost only one write to main memory.
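A minimal write-back sketch, assuming a tiny direct-mapped cache with one value per block and a Python dictionary standing in for main memory. Each line carries a dirty flag, and memory is written only when a dirty line is evicted or when `flush()` is called; `flush()` is a hypothetical stand-in for the time-based update mentioned above.

```python
class WriteBackCache:
    """Toy write-back cache: writes stay in the cache until the block is evicted."""

    def __init__(self, memory: dict[int, int], num_lines: int = 4):
        self.memory = memory            # stand-in for main memory
        self.num_lines = num_lines
        self.lines = {}                 # line index -> (addr, value, dirty)
        self.memory_writes = 0

    def _evict(self, index: int) -> None:
        addr, value, dirty = self.lines.pop(index)
        if dirty:                       # only modified blocks go back to memory
            self.memory[addr] = value
            self.memory_writes += 1

    def write(self, addr: int, value: int) -> None:
        index = addr % self.num_lines
        if index in self.lines and self.lines[index][0] != addr:
            self._evict(index)          # a different block occupies this line
        self.lines[index] = (addr, value, True)   # update cache only; mark dirty

    def read(self, addr: int) -> int:
        index = addr % self.num_lines
        if index in self.lines and self.lines[index][0] == addr:
            return self.lines[index][1]           # hit
        if index in self.lines:
            self._evict(index)
        value = self.memory.get(addr, 0)
        self.lines[index] = (addr, value, False)  # freshly loaded, so clean
        return value

    def flush(self) -> None:
        for index in list(self.lines):
            self._evict(index)


mem: dict[int, int] = {}
cache = WriteBackCache(mem)
for i in range(100):
    cache.write(0, i)                   # 100 writes to the same address
print(cache.memory_writes, mem.get(0))  # 0 None: memory not yet updated
cache.flush()
print(cache.memory_writes, mem[0])      # 1 99
```

A hundred writes to the same address reach main memory as a single write, which is where the speed advantage comes from.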

Write-through Cache

A technique used in cache design for writing information back into main memory. In a write-through cache, each time the processor writes a changed piece of data to the cache, the cache updates that information both in the cache and in main memory. This method is simple to implement, but it is not as fast as other designs such as write-back; delays can be introduced when the processor must wait for write operations to slower main memory to complete.
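For contrast, a minimal write-through sketch under similar simplifying assumptions: a dictionary stands in for main memory, and the cache is unbounded so that eviction can be ignored. Every write is passed on to memory immediately.

```python
class WriteThroughCache:
    """Toy write-through cache: every write updates both the cache and main memory."""

    def __init__(self, memory: dict[int, int]):
        self.memory = memory             # stand-in for main memory
        self.cache: dict[int, int] = {}  # unbounded here to keep the sketch short
        self.memory_writes = 0

    def write(self, addr: int, value: int) -> None:
        self.cache[addr] = value         # update the cached copy...
        self.memory[addr] = value        # ...and main memory, on every write
        self.memory_writes += 1          # each one is a (slow) main-memory write

    def read(self, addr: int) -> int:
        if addr not in self.cache:       # miss: load from memory
            self.cache[addr] = self.memory.get(addr, 0)
        return self.cache[addr]


mem: dict[int, int] = {}
cache = WriteThroughCache(mem)
for i in range(100):
    cache.write(0, i)
print(cache.memory_writes, mem[0])  # 100 99
```

Here the same hundred writes produce a hundred main-memory writes, although main memory is always up to date.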

Why is the Cache Important?

The main reason cache is important is that it increases the effective speed of a processor by supplying it with data more quickly. A processor can only crank through data while it is being fed data, and any delay between when the processor requests data and when it receives it means clock cycles are left idle that could otherwise have been used.
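One common way to quantify this is the average memory access time: the hit time plus the miss rate multiplied by the miss penalty. The sketch below evaluates that formula for illustrative numbers (a 1 ns hit time and a 100 ns miss penalty), which are assumptions rather than measurements of any real processor.

```python
def average_access_time(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time + miss_rate * miss_penalty


# Illustrative numbers only: 1 ns cache hit, 100 ns extra to reach RAM on a miss.
for miss_rate in (0.02, 0.05, 0.20):
    t = average_access_time(1.0, miss_rate, 100.0)
    print(f"miss rate {miss_rate:.0%}: {t:.1f} ns per access")
```

These numbers give roughly 3, 6, and 21 ns per access, so even a modest hit rate keeps the processor much closer to cache speed than to RAM speed.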

That said, summing up the importance of cache in general terms is difficult. Cache has traditionally received less attention than clock speed because, until fairly recently, it did not have a major impact on performance. The L2 cache on early Pentium 4 processors, for example, was only 256 or 512 kilobytes, depending on the model. Having some cache was useful, but it did not have a major impact on application performance because there was not enough of it for applications to make much use of. For example, the original Celeron had no L2 cache at all, yet it still performed surprisingly well in games compared with processors that did include one. This was partly because cache sizes were too small to hold more than very common, general-purpose sets of data, and partly because games generally make little use of CPU cache: the amount of frequently used data in a game far exceeds the cache available.

However, cache has become more important in modern processors thanks to better implementations and larger cache sizes. This increase in the importance of cache is probably due to the problems that became evident in Intel's Pentium 4 line-up. The Pentium 4 processors relied heavily on high clock speeds, and as a result they were power-hungry and ran hot. Intel learned from this when it created the Core 2 Duo series, which used lower clock speeds but made up for it with multiple cores and a much larger cache. These days, it is not unusual to find a processor with 8 MB of L2 cache. Cache has become important because it gives Intel and AMD a way to increase the performance of their processors without relying on higher clock speeds. Cache also goes hand-in-hand with the trend toward processors with multiple cores. Both the Phenom II and the Core i7 have larger caches than the Phenom and Core 2 architectures, and the trend of increasing cache size is likely to continue.
