Specifications

Click this to download the Minitar MWGUHA driver for Windows Vista (as well as Windows 98, ME and 2000), Linux and Mac.


The TP-LINK TL-WN322G Wireless USB Adapter gives you the flexibility to install the PC or notebook PC in the most convenient location available, without the cost of running network cables.

Its auto-sensing capability allows high packet transfer up to 54Mbps for maximum throughput, or dynamic range shifting to lower speeds due to distance or operating limitations in an environment with a lot of electromagnetic interference. It can also interoperate with all the 11Mbps wireless (802.11b) products. Your wireless communications are protected by up to 256-bit encryption, so your data stays secure.

Additionally, the TL-WN322G supports Soft AP, which lets it act as an access point for a PSP, bringing you an enjoyable online gaming experience.

The TP-Link TL-WN322G is a USB wireless adapter for desktop or notebook PCs. It supports both 802.11b and 802.11g, wireless LAN data transfer rates of 54/48/36/24/18/12/9/6/11/5.5/2/1 Mbps, Ad-hoc and Infrastructure modes, and roaming between access points.

Click this to download TP-Link TL-WN322G Driver for Windows Vista / XP / 2000 / Me / 98

The architecture of a network defines the protocols and components needed to satisfy application requirements. One popular standard for illustrating network architecture is the seven-layer Open Systems Interconnection (OSI) Reference Model, developed by the International Organization for Standardization (ISO). OSI specifies a complete set of network functions, grouped into layers, that reside within each network component. The OSI Reference Model is also a handy way to represent the various standards of a wireless network and how they interoperate.
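To make the layer model concrete, here is a minimal Python sketch that records the seven OSI layers and places a few familiar standards on them. The `STANDARD_LAYERS` table and the `describe` helper are hypothetical illustrations, and fitting TCP/IP-era protocols onto OSI layers is an approximation rather than an exact mapping.

```python
# The seven OSI Reference Model layers, numbered bottom (1) to top (7).
OSI_LAYERS = {
    1: "Physical",
    2: "Data Link",
    3: "Network",
    4: "Transport",
    5: "Session",
    6: "Presentation",
    7: "Application",
}

# Hypothetical lookup table: approximate OSI layers covered by some standards.
STANDARD_LAYERS = {
    "IEEE 802.11": (1, 2),  # wireless LAN: radio PHY plus the MAC sublayer of layer 2
    "IEEE 802.3": (1, 2),   # wired Ethernet: the same two layers over cable
    "IP": (3,),
    "TCP": (4,),
    "HTTP": (7,),
}


def describe(standard: str) -> str:
    """Return a one-line summary of where a standard sits in the OSI model."""
    layers = STANDARD_LAYERS[standard]
    names = ", ".join(f"{n} ({OSI_LAYERS[n]})" for n in layers)
    return f"{standard}: layer(s) {names}"


print(describe("IEEE 802.11"))  # IEEE 802.11: layer(s) 1 (Physical), 2 (Data Link)
```

Because a wireless LAN standard such as 802.11 only replaces layers 1 and 2, the layers above it (IP, TCP, applications) run unchanged, which is what makes the model useful for reasoning about wireless interoperability.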


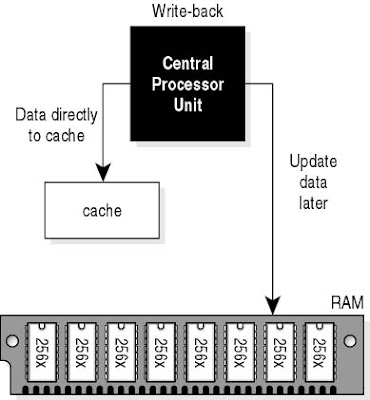

Cache is a special area of memory, managed by a cache controller, that improves performance by storing the contents of frequently accessed memory locations along with their addresses. A memory cache and a disk cache are not the same thing: a memory cache is implemented in hardware and speeds up access to memory, whereas a disk cache is software that improves hard-disk performance. When the processor references a memory address, the cache checks whether it holds that address. If it does (a cache hit), the information is passed directly to the processor and no RAM access is needed. A cache can speed up a computer whose RAM access is slow compared with its processor speed, because cache memory is much faster than normal RAM.
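The hit/miss check described above can be illustrated with a toy direct-mapped cache in Python. This is a simplified sketch, not how any particular cache controller works: the class name is hypothetical, each line holds a single word, and it remembers the full address, whereas real caches keep only tag bits and move whole cache lines.

```python
class DirectMappedCache:
    """Toy direct-mapped cache in front of a slow backing store ("RAM")."""

    def __init__(self, num_lines: int, memory: list):
        self.num_lines = num_lines
        self.memory = memory                 # stands in for main memory
        self.addresses = [None] * num_lines  # which address each line holds
        self.values = [None] * num_lines     # the cached contents
        self.hits = 0
        self.misses = 0

    def read(self, address: int) -> int:
        line = address % self.num_lines      # each address maps to exactly one line
        if self.addresses[line] == address:
            self.hits += 1                   # hit: answer without touching RAM
            return self.values[line]
        self.misses += 1                     # miss: go to RAM, then keep a copy
        value = self.memory[address]
        self.addresses[line] = address       # evicts whatever the line held before
        self.values[line] = value
        return value


ram = [100 + i for i in range(64)]           # 64 words of pretend RAM
cache = DirectMappedCache(num_lines=8, memory=ram)
for addr in [3, 3, 5, 3, 11, 3]:
    cache.read(addr)
print(cache.hits, cache.misses)              # 2 4
```

Address 11 maps to the same line as address 3 (11 mod 8 = 3), so reading it evicts address 3 and the final read of 3 misses again. Conflicts like this are one reason real caches are often set-associative.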


The Aspire 4535 delivers entertainment with high-definition visuals and surround sound. A true cinematic 16:9 aspect ratio and 1366 x 768 pixel resolution provide widescreen HD viewing of today's best entertainment, and an 8 ms response time delivers high-quality moving images.


The Ferrari 1100 takes ultra-portability into the fast lane. Driven by the revolutionary performance of dual-core processing power and wrapped in an exclusive, ultra-lightweight case, the Ferrari 1100 combines the unique style of race-bred innovation with the leading edge of mobile technology to bring your digital media to life wherever you are.

Microsoft Office 2010 (codename Office 14) is the next version of the Microsoft Office productivity suite for Microsoft Windows. It entered development during 2006 while Microsoft was finishing work on Microsoft Office 12, which was released as the 2007 Microsoft Office System.

When you do business online, one thing you have to be very aware of is the importance of search engines such as Google or Yahoo. When you consider that a large number of purchases are made directly after using a search engine, you will soon see why it is necessary to get the attention of search engines and to rank highly on their results pages.


A wireless network can be divided into two broad segments: short-range and long-range. Short-range wireless networks are networks with limited coverage. Examples include local area networks (LANs) in places such as company buildings, schools, campuses and homes, as well as personal area networks (PANs), in which several portable computers communicate with one another within a single coverage area. These networks typically operate in unlicensed spectrum, the bands set aside for industrial, scientific and medical (ISM) use.


2. Spread spectrum

3. Orthogonal Frequency Division Multiplexing (OFDM)

Windows Azure, codenamed “Red Dog”, is a cloud services operating system that serves as the development, service hosting and service management environment for the Azure Services Platform. Microsoft announced it in 2008. It is currently in Community Technology Preview, and commercial availability is expected around the end of calendar year 2009.

A subnetwork (subnet) is a logical division of a network, created to improve performance and provide security. It describes networked computers and devices that share a common, designated IP address routing prefix. Subnetting breaks a large network into smaller, more efficient subnets. To enhance performance, subnets limit the number of nodes that compete for available bandwidth and keep broadcast traffic contained, and they can be arranged hierarchically into a tree-like structure. Routers manage the traffic between subnets and form the borders between them. In an IP network, a subnet is identified by a subnet mask, a binary pattern stored in the configuration of each client machine, server or router.
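The subnet mask and the idea of a shared routing prefix can be explored with Python's standard `ipaddress` module. This is a minimal sketch: the 192.168.10.0/24 network and the `same_subnet` helper are hypothetical examples chosen for illustration.

```python
import ipaddress

# Hypothetical private network used only for illustration.
network = ipaddress.ip_network("192.168.10.0/24")
print(network.netmask)                          # 255.255.255.0
print(format(int(network.netmask), "032b"))     # the mask as a 32-bit binary pattern

# Break the /24 into four /26 subnets of 64 addresses each.
subnets = list(network.subnets(new_prefix=26))
for subnet in subnets:
    print(subnet, "mask", subnet.netmask)


def same_subnet(a: str, b: str, prefix_len: int) -> bool:
    """True if two hosts share a routing prefix, i.e. no router sits between them."""
    net_a = ipaddress.ip_interface(f"{a}/{prefix_len}").network
    net_b = ipaddress.ip_interface(f"{b}/{prefix_len}").network
    return net_a == net_b


print(same_subnet("192.168.10.20", "192.168.10.50", 26))  # True
print(same_subnet("192.168.10.20", "192.168.10.77", 26))  # False: .77 is in .64/26
```

Hosts that fail the `same_subnet` check can still talk to each other, but only through a router, which is exactly the border role described above.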


The Windows Registry is one of the most important parts of a Windows computer. It stores information about the system: hardware, operating system software, user settings and other software. Without the Registry, Windows cannot run.

Antivirus software is a computer program designed to detect and eliminate computer viruses and other malware. Modern antivirus scanners can neutralize many kinds of threats, including worms and Trojan horses, and play a central role in virus and spyware removal.

New cards mean another round in the ongoing multi-GPU battle.

Viewed this way, CrossFire - as AMD's multi-card tech is known - makes more sense than the competing SLI. After all, why take two NVIDIA cards into the shower when one will do? A single GTX 280 will easily outperform anything else on the market without needing to be paired up. On the other hand, anyone who bought a GeForce 8800GT last year - and there were loads of us - will surely be watching as the price for a suitable partner tumbles.

While it's still being presented as a revolutionary idea, we're used to hardware zerging like this now. Indeed, it's a cheeky move by AMD to claim for its own the territory that NVIDIA first broached with SLI all those years ago. The question is, with single GPUs getting ever more powerful and game engines standing relatively still, is this all just smoke and marketing mirrors?

Both companies use similar techniques to get their cards working together in harmony. Games are - wherever possible - profiled for the best possible performance increases. By default, the drivers use Alternate Frame Rendering (AFR), in which one card renders one frame while the other card prepares the next. In rarer cases, split-frame rendering, where the pixels of a single frame are load balanced between the two cards, will make a game run faster.

Some competition gamers swear by split-frame rendering, arguing that the minor latency introduced in AFR can affect fast-paced games, but for most of us the drivers will simply select AFR and we won't be any the wiser. Indeed, with AMD's control panel you won't have any choice; but while you can customize profiles for NVIDIA cards, it's unlikely you'll ever need to.
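The difference between the two modes can be sketched as two scheduling functions. This is a hypothetical Python illustration of the idea, not how either vendor's driver is implemented: `afr_schedule` deals whole frames to the cards in turn, and `sfr_split` divides the rows of one frame into bands, optionally weighted to balance the load.

```python
from typing import Dict, List, Optional, Tuple


def afr_schedule(num_frames: int, num_gpus: int) -> Dict[int, int]:
    """Alternate Frame Rendering: whole frames are dealt out round-robin."""
    return {frame: frame % num_gpus for frame in range(num_frames)}


def sfr_split(height: int, num_gpus: int = 2,
              weights: Optional[List[float]] = None) -> List[Tuple[int, int, int]]:
    """Split-frame rendering: divide one frame's rows into one band per GPU.

    `weights` lets the split be load balanced, e.g. giving more rows to the
    GPU whose part of the screen is cheaper to draw; equal by default.
    Returns (gpu, first_row, end_row) tuples, with end_row exclusive.
    """
    if weights is None:
        weights = [1] * num_gpus
    total = sum(weights)
    bounds, running = [0], 0
    for w in weights:
        running += w
        bounds.append(round(height * running / total))  # cumulative band edges
    return [(gpu, bounds[gpu], bounds[gpu + 1]) for gpu in range(len(weights))]


print(afr_schedule(4, 2))               # {0: 0, 1: 1, 2: 0, 3: 1}
print(sfr_split(768))                   # [(0, 0, 384), (1, 384, 768)]
print(sfr_split(768, weights=[3, 1]))   # [(0, 0, 576), (1, 576, 768)]
```

With AFR each card only has to finish every other frame, which is where the extra throughput comes from; but frames are queued ahead across the cards, which is the small added latency that some competitive players object to.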

Both companies, too, require a hardware bridge to connect the cards together using internal connectors inside the PC. This gives a direct point of communication between the cards independent of the potentially congested PCI Express bus, but isn't fast enough to carry all the data they need to share. So, are you better off going for the very best single GPU card you can lay your hands on, or should you look for a more arcane arrangement of graphics chips? And if you do, should you opt for SLI or CrossFire?

Back to Basics

A superficial glance back at the last 12 months and the answer would seem to favor multi-GPU arrays. NVIDIA's 9800GX2 - two G92 chips on one card - reigned supreme in the performance stakes up until the launch of the GTX 280. By coupling two GX2s together you got the Quake-like power-up of Quad SLI, and framerates that would make your eyes bleed.

AMD, meanwhile, stuck to its guns and released the HD3870X2, a dual-chip version of its highest-end card. In the same kind of performance league as a vanilla 9800GTX, it may not have been elegant but it was great value for money.

That's just the off-the-shelf packages. With the right motherboard, two, three or even - in AMD's case - four similar cards can be combined to create varying degrees of gaming power. AMD also had an advantage on paper, in that HD3850s and HD3870s could be mixed in configurations of up to four cards too.

Both companies even went as far as to release Hybrid SLI and Hybrid CrossFire, matching a low-end integrated graphics chip with a low-end discrete graphics chip. The result in both was much less than the sum of their parts: two rubbish GPUs which, when combined, were still poor for gaming.

And right there, at the very bottom, is where the argument for multi-GPU graphics starts to steadily unravel. Despite all the time that's passed since SLI first reappeared, the law of diminishing returns on additional graphics cards still applies. Two cards are not twice as fast as one, and adding a third card will often increase performance by a mere single-digit percentage.

That, of course, is if they work together at all. Even now, anyone going down the multiple graphics route is going to spend a lot more time installing and updating drivers to get performance increases in their favorite games. Most infamously, Crysis didn't support dual-GPUs until months after its release, and even then it still required a hefty patch from the developers to get two cards to play nicely together. It's now legend that the one game that could really have benefited from a couple of graphics cards refused point blank to make use of them.

That's very bad news for owners - or prospective owners - of GX2 or X2 graphics cards, which require SLI or CrossFire drivers; so another strike then for the single card. It would be churlish to say things haven't improved at all recently, but suffice to say that in the course of putting together this feature, we had to re-benchmark everything three times more than is normally necessary, because driver issues had thrown up curious results.

Before you even get to installing software, though, there's a bucket load of other considerations to take into account. First and foremost is your power supply: people looking to bag a bargain by linking together two lower-end cards will often find that they have to spend another $100 or so on a high-quality power supply that not only puts out enough juice for their system but also has enough six- or eight-pin PCI Express power connectors for all the graphics cards.
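The power-supply question comes down to simple arithmetic, sketched below as a quick sanity check. This Python snippet is hypothetical: the wattages are made-up placeholders rather than measured figures for any real card, the `check_psu` name is invented, and the 20% headroom margin is an assumption rather than a rule from this article.

```python
def check_psu(psu_watts, psu_pcie_connectors, component_watts,
              card_connectors, headroom_pct=20):
    """Rough power-supply sanity check for a multi-card build.

    component_watts: estimated peak draw per component (placeholder figures).
    card_connectors: PCI Express power connectors each graphics card needs.
    headroom_pct: safety margin on top of the estimated load (an assumption).
    """
    load = sum(component_watts.values())
    recommended = load * (100 + headroom_pct) // 100   # integer watts
    return {
        "load_w": load,
        "recommended_w": recommended,
        "enough_power": psu_watts >= recommended,
        "enough_connectors": psu_pcie_connectors >= sum(card_connectors),
    }


# Hypothetical build: two lower-end cards on a budget 450 W supply.
build = {"cpu": 95, "card_1": 110, "card_2": 110, "board_drives_fans": 75}
print(check_psu(450, 2, build, card_connectors=[1, 1]))
# {'load_w': 390, 'recommended_w': 468, 'enough_power': False, 'enough_connectors': True}
```

Checking connectors separately from wattage matters because a supply can have enough total output yet still lack a spare six- or eight-pin lead for the second card.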

Many is the upgrader who's witnessed the dreaded 'blue flash of death' when the PC equivalent of St Elmo's Fire indicates that the $30 power supply that you thought was a bargain is, in fact, destined for a quick trip to the recycling centre.

Even more critical with the current generation of cards, though, is heat dissipation. All of AMD's HD4800 series cards can easily top 90°C under load, and a couple of them in your PC will challenge any airflow you've painstakingly designed for. To make matters even worse, many motherboards place their PCI-Express slots so close together that the heatsinks are almost touching. The HD3850s are single-slot cards, but that means they vent all their heat inside the case.

On the NVIDIA side of things, size is more of an issue. The new cards - both GTX 260 and GTX 280 - are enormous. Even though they're theoretically able to couple up on existing motherboards, it's unlikely you'll find one with absolutely nothing at all - not even a capacitor - protruding between the CPU socket and the bottom of the board.

That's because the merest jumper out of place will prevent the two cards sitting comfortably. If all this is beginning to sound a little cynical, let's point out that there have been bright developments in recent history. Most notable is the introduction last year of PCI-Express 2.0, which means there are more motherboards out there with at least two PCI-e slots that get the full x16 bandwidth, so it's easier to keep both cards fed full of game data at all times.


Even though switches and hubs are both used to link computers together in a network, a switch is more expensive, and a network built with switches is typically faster than one built with hubs. This is because when a hub receives data on one of its ports, it repeats that data out of all of its other ports, so every computer on the network receives it; a switch, by contrast, forwards it only to the port where the destination computer is connected. If more than one computer on a hub tries to send a packet at the same time, the transmissions collide and an error occurs.

When a collision occurs, the computers involved have to go through a recovery procedure, and that procedure is prescribed by Ethernet's CSMA/CD (Carrier Sense Multiple Access with Collision Detection) protocol. Every Ethernet adapter has its own transmitter and receiver. If the adapters did not have to use their receivers to listen for collisions, they could send data while receiving it. Because they must listen, they can only do one at a time, so only one chunk of data can be on the network at once, which slows the whole process down.
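The recovery procedure that CSMA/CD prescribes is truncated binary exponential backoff: after a collision, each station waits a random number of slot times before retrying, and the range it picks from doubles with each further collision. The Python sketch below models that rule as IEEE 802.3 defines it (range capped after 10 collisions, frame dropped after 16 attempts). The function names are hypothetical, and `collisions_until_success` is a deliberately simplified simulation that ignores real frame timing.

```python
import random

MAX_ATTEMPTS = 16      # 802.3 gives up on a frame after 16 transmission attempts
BACKOFF_LIMIT = 10     # the random range stops growing after 10 collisions
SLOT_TIME_BITS = 512   # one slot = 512 bit times (51.2 microseconds at 10 Mbps)


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Truncated binary exponential backoff after the n-th collision."""
    if collisions >= MAX_ATTEMPTS:
        raise RuntimeError("excessive collisions: frame dropped")
    exponent = min(collisions, BACKOFF_LIMIT)
    return rng.randint(0, 2 ** exponent - 1)   # wait 0 .. 2^k - 1 slot times


def collisions_until_success(num_stations: int, rng: random.Random) -> int:
    """Simulate stations that have just collided, retrying until one gets through.

    Simplification: if exactly one station picks the earliest slot, it
    transmits alone and the others defer via carrier sense; a tie for the
    earliest slot is another collision.
    """
    collisions = 1
    while True:
        picks = [backoff_slots(collisions, rng) for _ in range(num_stations)]
        if picks.count(min(picks)) == 1:
            return collisions
        collisions += 1


rng = random.Random(42)
print(collisions_until_success(2, rng))   # usually 1 or 2 for two stations
```

The doubling range is what makes a busy half-duplex segment degrade gracefully: each extra collision spreads the retries further apart, at the cost of longer waits.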

Because hubs operate only at half duplex, meaning data can flow in only one direction at a time, and because a hub broadcasts the data from one computer to all the others, the maximum bandwidth on a 100 Mbps hub is 100 Mbps, and that bandwidth is shared by all of the computers connected to it. As a result, when someone using a PC on the hub downloads one or more large files from another PC, the network becomes congested. On a 10 Mbps 10Base-T network, the effect is to slow the network to a crawl.

If you only want to connect two computers, you can link them directly over Ethernet with a crossover cable, and collisions are no longer a problem. A crossover cable hardwires the Ethernet transmitter on one PC to the receiver on the other. Most 100Base-TX Ethernet adapters use a system called auto-negotiation to detect that listening for collisions is unnecessary, and will then run in full-duplex mode.

The result is a crossover link with no delays caused by collisions, in which data can flow in both directions at the same time. The maximum bandwidth is 200 Mbps, that is, 100 Mbps in each direction.
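The bandwidth arithmetic for hubs and full-duplex links can be put side by side in a short Python sketch. The function names are hypothetical, and the hub figure is a best-case upper bound that assumes the active hosts share the medium evenly with no collision overhead.

```python
def hub_share_mbps(link_mbps: float, active_hosts: int) -> float:
    """Best-case per-host rate on a hub: one half-duplex medium shared by all.

    Ignores collision and protocol overhead, so real throughput is lower.
    """
    return link_mbps / active_hosts


def full_duplex_mbps(link_mbps: float) -> dict:
    """A full-duplex link (e.g. a crossover cable) carries traffic both ways at once."""
    return {"each_direction": link_mbps, "aggregate": 2 * link_mbps}


print(hub_share_mbps(100, 4))    # 25.0 Mbps each on a 100 Mbps hub
print(hub_share_mbps(10, 10))    # 1.0 Mbps each on a busy 10Base-T hub
print(full_duplex_mbps(100))     # {'each_direction': 100, 'aggregate': 200}
```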

Most of us use a Windows operating system to run our computers, and one of the most basic components of that operating system is the registry. The Windows Registry is the database that stores the settings and configuration information Windows needs to run the computer.

The Recycle Bin is a great feature of Windows, but it is surprisingly difficult to rename. Unlike other system icons on the desktop, you cannot just right-click it and select Rename as usual.


Now your Recycle Bin name has changed! :)

Lately, many viruses and worms have been spreading very quickly through storage media and networks. With storage media in particular, a virus or worm can easily infect several computers through a device such as a flash disk, helped by the AutoRun feature of the computer's operating system. So, to prevent such a virus from infecting our computer when a flash disk is plugged in, the AutoRun feature should be turned off.
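One common way to turn AutoRun off is the `NoDriveTypeAutoRun` policy value under `HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Policies\Explorer`, where the value 0xFF disables AutoRun on every drive type (as described in Microsoft's documentation for that setting). The Python sketch below only generates the text of a `.reg` file that can then be imported with regedit; the function names are hypothetical, and writing the file as UTF-16 assumes that is acceptable to regedit for "Version 5.00" files, which is the encoding regedit itself exports.

```python
# Explorer policy key that holds the AutoRun switch for the current user.
AUTORUN_KEY = r"HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Policies\Explorer"
ALL_DRIVE_TYPES = 0xFF  # bit mask: 0xFF disables AutoRun on every drive type


def autorun_reg(mask: int = ALL_DRIVE_TYPES) -> str:
    """Build the text of a .reg file that sets NoDriveTypeAutoRun to `mask`."""
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{AUTORUN_KEY}]",
        f'"NoDriveTypeAutoRun"=dword:{mask:08x}',  # DWORD as 8 hex digits
        "",
    ]
    return "\r\n".join(lines)   # .reg files use Windows (CRLF) line endings


def save_reg(path: str, text: str) -> None:
    """Write the .reg file as UTF-16 (Python adds a byte-order mark)."""
    with open(path, "w", encoding="utf-16", newline="") as f:
        f.write(text)


print(autorun_reg())
```

Generating the file rather than editing the registry directly keeps the change reviewable before it is applied, and the same text can be reused on several machines.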

